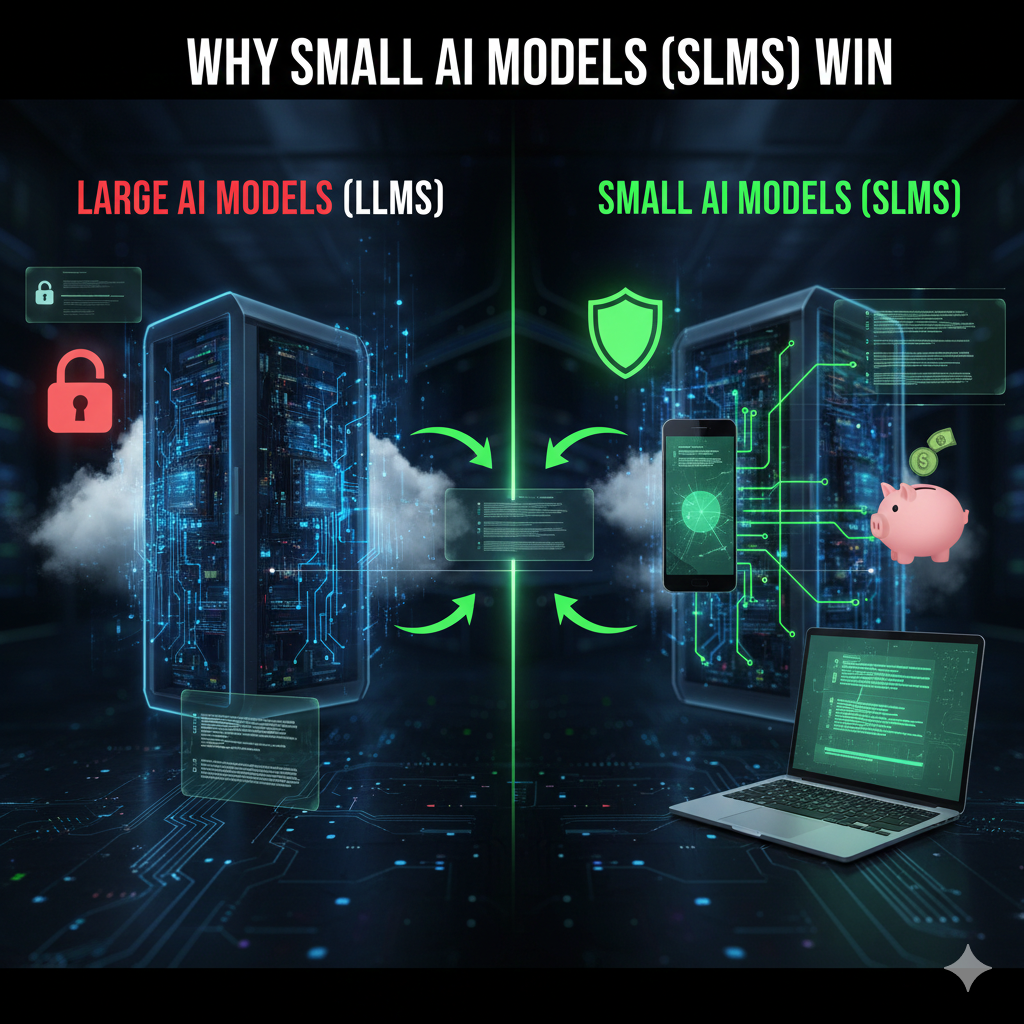

In the initial fever of the Artificial Intelligence revolution, the global tech industry was obsessed with scale. The prevailing belief was that “bigger is always better,” leading to the creation of massive Large Language Models (LLMs) with hundreds of billions, and reportedly trillions, of parameters. However, as we navigate the technological landscape of 2026, a significant shift has occurred. **Small Language Models (SLMs)**, often simply called small AI models, are now winning the race for practical, sustainable, and specialized applications. This transition marks the end of “Brute Force AI” and the beginning of “Elegant Intelligence.” By focusing on high-quality, curated datasets and efficient architectures, SLMs have shown that many tasks do not need a massive cloud infrastructure to get strong reasoning. In 2026, much of the real innovation is happening not in giant data centers, but on the local processors of our smartphones, laptops, and specialized industrial hardware. Small AI models have democratized intelligence, making it faster, more private, and significantly more affordable.

The Efficiency Revolution: Doing More with Less

The primary reason SLMs are dominating 2026 is their efficiency. Massive models like those seen in 2023 required thousands of high-end GPUs to train and consumed enormous amounts of electricity. In contrast, Small Language Models are designed to be “lean.” Through techniques such as quantization (storing weights at lower numeric precision) and knowledge distillation (training a small “student” model to imitate a large “teacher”), engineers have learned how to pack much of the reasoning capability of a giant into a model with as few as 1 to 7 billion parameters. This means that an SLM in 2026 can perform specialized tasks, such as medical diagnosis, financial forecasting, or software coding, with accuracy approaching that of a far larger model on those narrow tasks, but at a fraction of the energy cost.
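To make the quantization idea concrete, here is a minimal sketch of symmetric 8-bit quantization in plain Python, plus a back-of-the-envelope estimate of weight storage. It is an illustration under simplifying assumptions, not how any particular SLM is built: real toolchains quantize per channel or per block and handle outliers, and the function names and sample weights below are made up for this example.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: w is approximated by scale * q,
    where q is an integer in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map the stored integers back to approximate floating-point weights."""
    return [scale * v for v in q]


def weight_memory_gb(num_params, bits_per_weight):
    """Approximate weight storage in GB (1 GB = 1e9 bytes).
    Ignores activations, caches, and per-block scale overhead."""
    return num_params * bits_per_weight / 8 / 1e9


# Hypothetical weights, chosen only for illustration.
weights = [0.42, -1.27, 0.03, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q)                                        # small integers instead of floats
print(max_error <= scale / 2 + 1e-12)           # rounding error is at most half a step
print(weight_memory_gb(7_000_000_000, 16))      # 14.0
print(weight_memory_gb(7_000_000_000, 4))       # 3.5
```

The last two lines show why this matters for local hardware: the same 7-billion-parameter model needs roughly 14 GB for its weights at 16 bits each, but only about 3.5 GB at 4 bits, which is within reach of a laptop or a high-end phone.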

Quality Over Quantity: The Data Paradox

We have learned that much of the data used to train the original LLMs was “garbage.” In 2026, SLMs win because they are trained on carefully curated sources: specialized textbooks, human-verified material, and filtered “synthetic data” generated for the purpose. Instead of scraping the entire public internet, which is full of misinformation and noise, SLM training focuses on the highest-quality information available. This refined training process allows small models to develop a deeper understanding of specific subjects, leading to fewer “hallucinations” and more reliable outputs within their domain. For a lawyer or a doctor, a small model that knows the law or medicine deeply is far more valuable than a giant model that knows everything vaguely.

Near-Zero Latency: Intelligence at the Speed of Thought

In a world of real-time interactions, waiting for a cloud-based AI to respond is no longer acceptable. SLMs win because they run locally on the “Edge.” When the model lives on your device, there is no network round trip to a server and no queue to wait in; the only delay left is the time the device itself needs to compute the answer. This low-latency performance is critical for the augmented reality (AR) glasses and autonomous systems of 2026. Whether it’s providing instant translation during a conversation or helping a surgical robot make a split-second decision, SLMs provide a responsiveness that large cloud models struggle to match.
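The latency argument comes down to simple addition, sketched below. Every number here is a hypothetical placeholder rather than a measurement, and the `LatencyBudget` class is invented for this example. Note that the on-device model is assumed to compute more slowly than a data-center GPU; it still comes out ahead because it pays nothing for the network or the queue.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """Response time split into its parts, all in milliseconds."""
    network_rtt_ms: float  # round trip to a remote server (0 when local)
    queue_ms: float        # waiting behind other users' requests (0 when local)
    compute_ms: float      # time the model needs to produce the answer

    def total_ms(self) -> float:
        return self.network_rtt_ms + self.queue_ms + self.compute_ms


# Hypothetical figures for illustration only.
cloud = LatencyBudget(network_rtt_ms=80.0, queue_ms=40.0, compute_ms=150.0)
local = LatencyBudget(network_rtt_ms=0.0, queue_ms=0.0, compute_ms=200.0)

print(cloud.total_ms())  # 270.0
print(local.total_ms())  # 200.0
```

The comparison flips if the local hardware is much slower than assumed, so the honest claim is that running locally removes the network and queueing terms, not that the total is always smaller.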

Privacy: The Ultimate Competitive Edge

In 2026, data sovereignty is one of the biggest concerns for corporations and individuals alike. Small AI models offer one of the strongest privacy guarantees available. Because an SLM is small enough to be stored on your own hardware, your sensitive data never has to leave your device. This has unlocked AI for highly regulated industries like national security, healthcare, and banking. A hospital can now use a local SLM to analyze patient records without sending them to a third-party server, which removes a major source of breach risk. This “Air-Gapped Intelligence,” where the model can run on machines with no network connection at all, is a primary reason why governments and global banks are migrating to SLM architectures in 2026.

Lower Costs and Democratization

The cost of running a massive AI in 2023 was astronomical, with the heaviest users spending millions of dollars on API fees. In 2026, SLMs have turned AI into a commodity. Small businesses can now afford to fine-tune their own custom models, tailored specifically to their own customer data and brand voice. This democratization has led to an explosion of innovation at the local level. From a local bakery using an SLM to optimize its inventory to a small law firm using one to automate contract review, the cost-effectiveness of SLMs has ensured that AI is no longer a luxury for tech giants, but a tool for everyone.
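A rough way to check whether a local model pays off is to compare a monthly API bill with the amortized cost of owning the hardware. The sketch below does that arithmetic; the prices, token volumes, and hardware lifetime are all hypothetical, and the function names are invented for this example.

```python
def monthly_api_cost(tokens_per_month, usd_per_million_tokens):
    """Pay-per-use cost of a hosted model."""
    return tokens_per_month / 1_000_000 * usd_per_million_tokens


def monthly_local_cost(hardware_usd, lifetime_months, power_usd_per_month):
    """Hardware price spread evenly over its lifetime, plus electricity."""
    return hardware_usd / lifetime_months + power_usd_per_month


def break_even_tokens(local_usd_per_month, usd_per_million_tokens):
    """Monthly token volume at which the API bill equals the local cost."""
    return local_usd_per_month / usd_per_million_tokens * 1_000_000


# Hypothetical inputs: 50M tokens a month at $10 per million tokens,
# versus a $3,600 workstation kept for 36 months at $25 a month in power.
api = monthly_api_cost(50_000_000, 10.0)
local = monthly_local_cost(3600.0, 36, 25.0)

print(api)                             # 500.0
print(local)                           # 125.0
print(break_even_tokens(local, 10.0))  # 12500000.0
```

Under these assumed numbers the local setup wins above roughly 12.5 million tokens a month. Below that volume, or with cheaper API pricing, the hosted model stays the more economical choice, and the sketch leaves out staff time for setup and maintenance.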

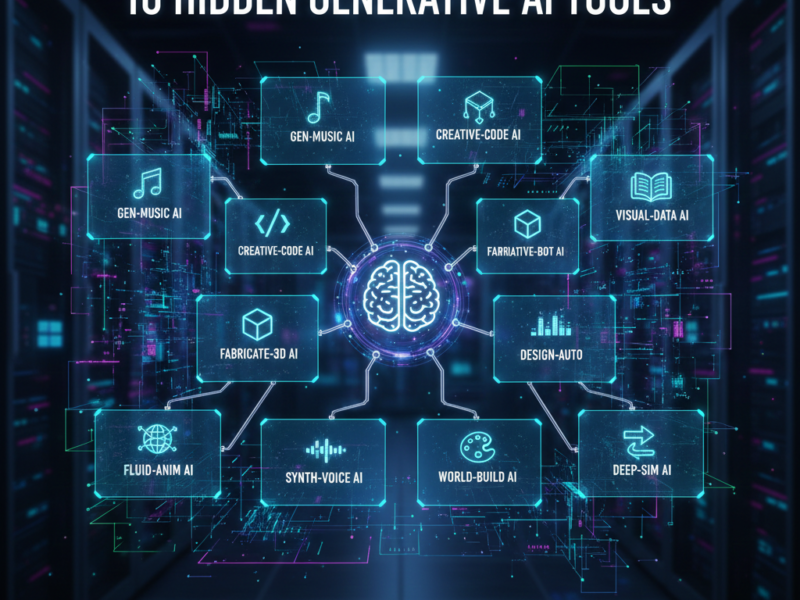

Specialized “Vertical” AI

The year 2026 has seen the rise of “Vertical AI,” where models are built for one specific purpose. SLMs are the perfect engine for this trend. Instead of one model that tries to write poetry and calculate rocket trajectories, we now have thousands of specialized SLMs. There is a “Legal-SLM,” a “Biology-SLM,” and a “Mechanical Engineering-SLM.” These specialists win because their limited capacity is not spent on irrelevant information. They are fine-tuned for a single domain, providing a level of professional expertise that general-purpose LLMs struggle to match at a comparable cost.
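With many specialists instead of one generalist, something has to decide which model receives each request. The sketch below shows one very simple way to do that, a keyword-overlap router. This is an assumption made for illustration: the article does not say how routing is done, the keyword lists are made up, and production systems are more likely to use a trained classifier or embedding similarity.

```python
import re

# Hypothetical keyword lists for the specialists named above.
SPECIALIST_KEYWORDS = {
    "Legal-SLM": {"contract", "clause", "liability", "statute"},
    "Biology-SLM": {"protein", "cell", "enzyme", "genome"},
    "Mechanical Engineering-SLM": {"torque", "bearing", "gear", "stress"},
}


def route(query, fallback="general-purpose model"):
    """Return the specialist whose keywords overlap the query the most,
    or the fallback when no keyword matches at all."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    scores = {
        name: len(words & keywords)
        for name, keywords in SPECIALIST_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else fallback


print(route("Review the liability clause in this contract"))  # Legal-SLM
print(route("What torque can this gear handle?"))  # Mechanical Engineering-SLM
print(route("Write a short poem about autumn"))    # general-purpose model
```

The fallback matters: a query that matches no specialist should go to a general model rather than be forced onto the wrong expert.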

Conclusion: The Future is Small and Local

As we look forward, the dominance of Small AI Models in 2026 represents the maturing of the technology. We have moved past the initial awe of “all-knowing” machines and into the era of practical, efficient, and private tools. SLMs have proven that intelligence is not about size; it is about relevance, speed, and trust. By bringing AI off the cloud and onto our devices, SLMs have integrated intelligence into the very fabric of our lives, ensuring a future where everyone has a private, powerful, and specialized assistant in their pocket. The era of the giant is ending; the era of the specialist has begun.